Using AWS to deploy a Startup project: Part 1 - Frontend

The challenge

Years ago, I joined a startup that was building a product and trying to find a market for its idea. I started as a Frontend developer, and after we finished the first version of the application, I wanted to be more involved in the backend, so I swapped roles with the Backend developer.

The application consisted of three services: a backend API, a frontend that would also be deployed to mobile devices, and an administration panel.

Together with the CTO at the time, we decided to deploy our services on AWS. Because we wanted to leverage Docker images, we chose AWS ECS as our deployment target.

We didn’t go with Kubernetes because it seemed like overkill since we had no users at the beginning.

However, we had some specific requirements:

Frontend: Should be cached close to the user, and users should be able to upload a photo.

Backend API: Should be public, so that the frontend and the mobile application could consume it.

Admin: Should be an internal tool. Since we were a small startup at the time, it was acceptable to share VPN access.

All of them also required a test environment, which we named staging.

We managed the domain’s DNS in Route 53, and because the certificate had to be validated, we could automate that step with ACM’s DNS validation.
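ACM DNS validation works by publishing a CNAME record that ACM generates for each domain on the certificate. We handled this in Terraform; as a language-neutral sketch, the Python below turns the `DomainValidationOptions` section of an `aws acm describe-certificate` response into a Route 53 `ChangeBatch` that `aws route53 change-resource-record-sets` accepts. The sample record names and values are made up, and the 300-second TTL is an assumption.

```python
import json


def validation_change_batch(certificate: dict, ttl: int = 300) -> dict:
    """Build a Route 53 ChangeBatch with the CNAME records ACM needs for DNS validation.

    `certificate` is the "Certificate" object returned by `aws acm describe-certificate`.
    """
    changes = []
    seen = set()
    for option in certificate.get("DomainValidationOptions", []):
        record = option.get("ResourceRecord")
        # ResourceRecord only appears once ACM has generated the validation record;
        # a wildcard and its base domain can share the same record, so skip duplicates.
        if record is None or record["Name"] in seen:
            continue
        seen.add(record["Name"])
        changes.append({
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": record["Name"],
                "Type": record["Type"],  # "CNAME" for ACM DNS validation
                "TTL": ttl,
                "ResourceRecords": [{"Value": record["Value"]}],
            },
        })
    return {"Comment": "ACM DNS validation", "Changes": changes}


if __name__ == "__main__":
    # Hypothetical, trimmed describe-certificate output.
    sample = {
        "DomainName": "*.frontend.thestartup.domain",
        "DomainValidationOptions": [{
            "DomainName": "*.frontend.thestartup.domain",
            "ValidationMethod": "DNS",
            "ResourceRecord": {
                "Name": "_abc123.frontend.thestartup.domain.",
                "Type": "CNAME",
                "Value": "_def456.acm-validations.aws.",
            },
        }],
    }
    print(json.dumps(validation_change_batch(sample), indent=2))
```

Using `UPSERT` keeps the operation idempotent, so re-running it after a partial failure does not error on an existing record.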

Throughout these examples, I’ll use thestartup.domain as our sample domain name. Keep in mind that these examples may differ slightly from the actual diagrams and implementation, as I’m recreating them from memory.

Why we avoided the AWS Console and used Terraform

We first experimented by creating some resources in the console, but we soon realized that relying on memory to make changes was risky: we could accidentally break existing resources.

Even though CloudFormation was available, we decided to go with Terraform. I don’t remember which CloudFormation features we were missing at the time; I only remember the main reason we chose Terraform: its syntax seemed much cleaner and easier than CloudFormation’s.

Frontend

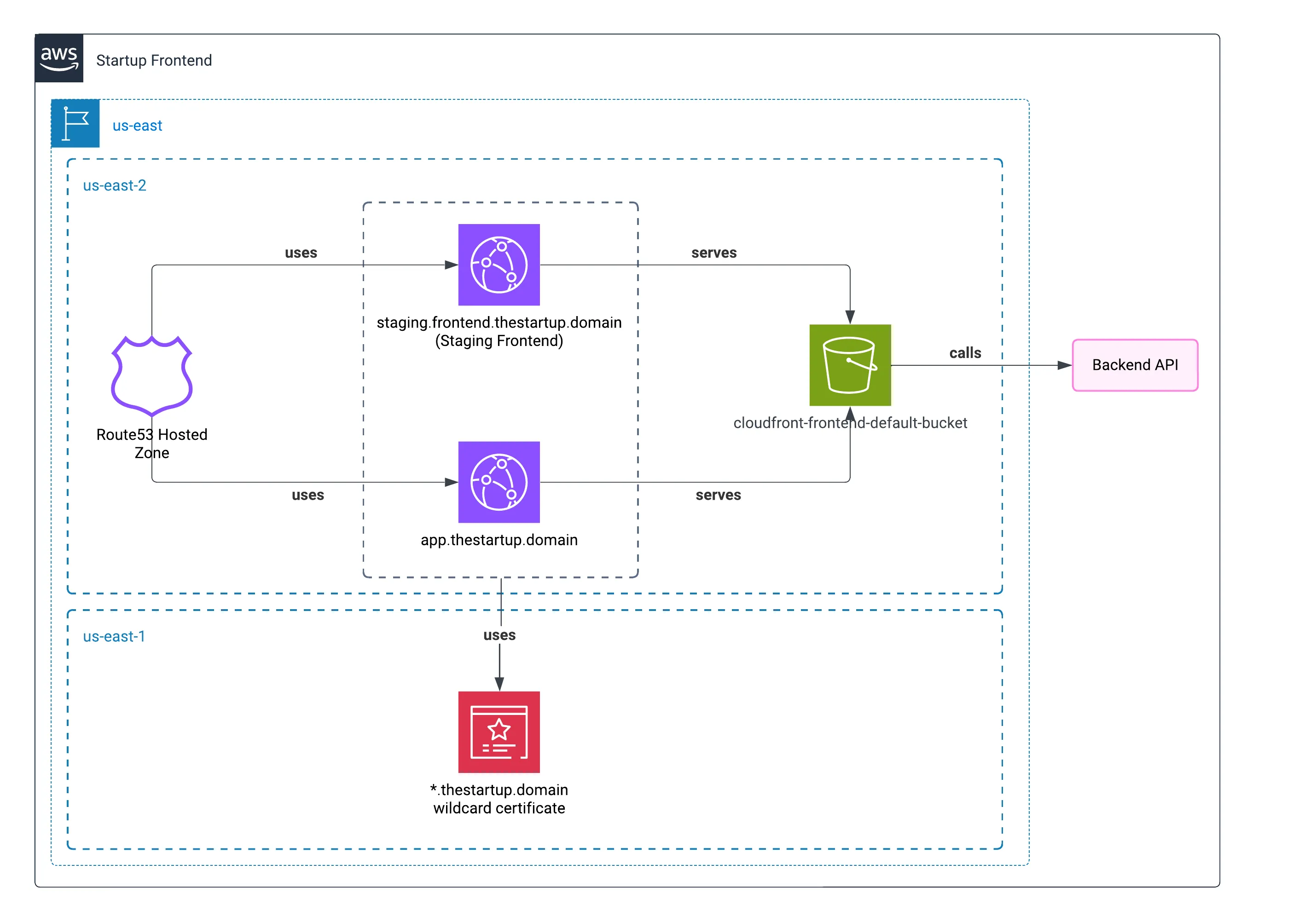

Architecture

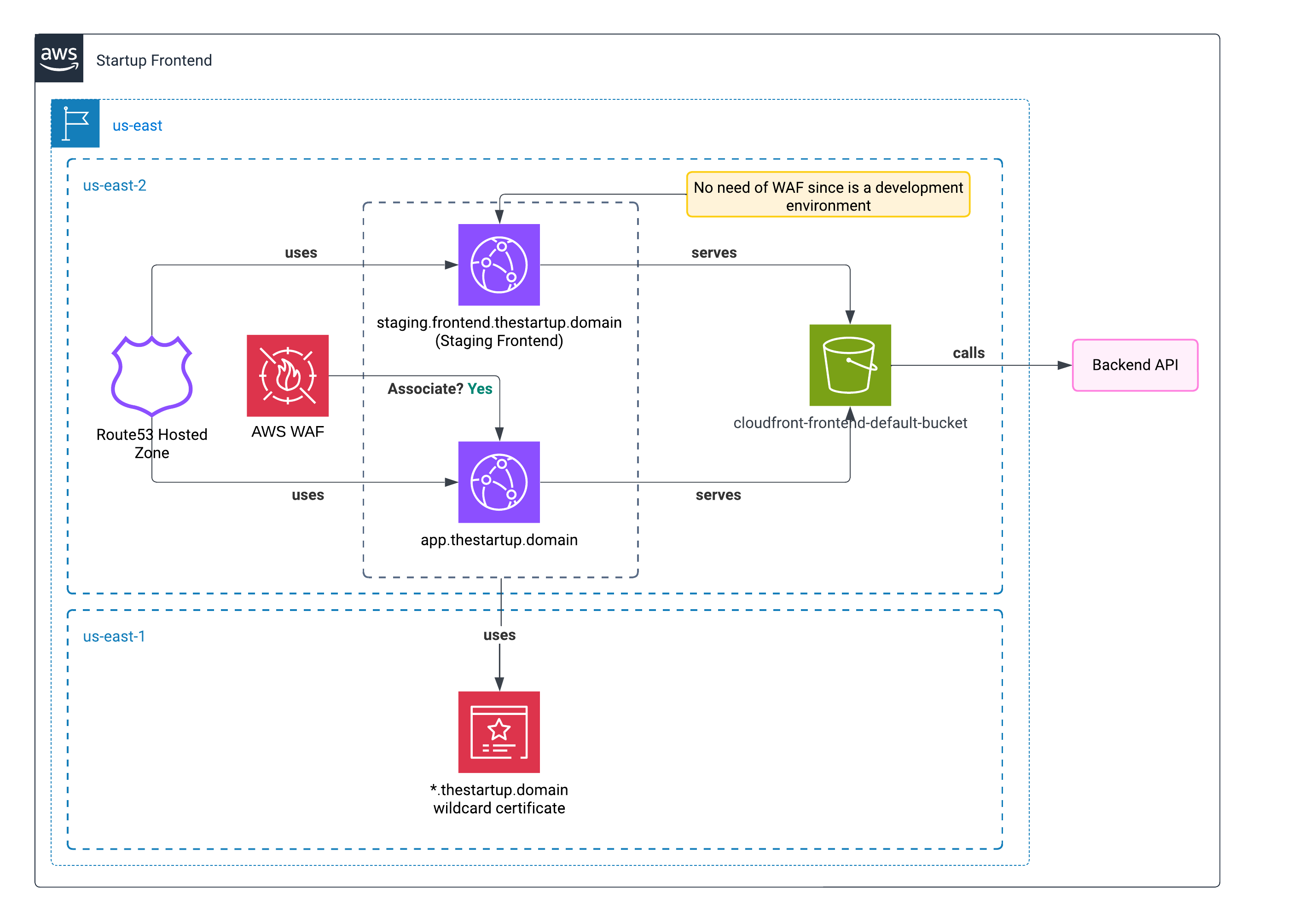

For the frontend, we found that we could host the static application in S3 and put CloudFront in front of it, with a certificate from AWS Certificate Manager, to serve the HTML entirely over HTTPS. And since CloudFront caches content at its edge locations, it seemed like the most logical option.

Here is the diagram of how we planned to host the frontend app.

Two different regions

I used two regions, because relying only on us-east-1 can be risky: new AWS features and changes often launch there first, and they can occasionally cause stability disruptions.

The only service we couldn’t move out of us-east-1 was AWS Certificate Manager (ACM), which issued our SSL certificate: CloudFront only accepts ACM certificates issued in us-east-1.
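Because of that restriction, a quick sanity check before wiring a certificate into a distribution is to look at the region segment of its ARN. This small Python sketch parses the standard ARN layout (`arn:partition:service:region:account-id:resource`); the account and certificate IDs in the example are made up.

```python
def is_cloudfront_compatible_cert(arn: str) -> bool:
    """Return True if an ACM certificate ARN can be attached to CloudFront.

    CloudFront only uses ACM certificates issued in us-east-1.
    """
    # arn:partition:service:region:account-id:resource
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    _, _, service, region, _, resource = parts
    return service == "acm" and region == "us-east-1" and resource.startswith("certificate/")


if __name__ == "__main__":
    # Hypothetical account ID and certificate ID.
    print(is_cloudfront_compatible_cert(
        "arn:aws:acm:us-east-1:123456789012:certificate/1234abcd-12ab-34cd-56ef-1234567890ab"))  # True
    print(is_cloudfront_compatible_cert(
        "arn:aws:acm:us-east-2:123456789012:certificate/1234abcd-12ab-34cd-56ef-1234567890ab"))  # False
```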

Using two domains

We used two domains besides thestartup.domain itself because the main domain served a landing page, and we wanted to deploy the actual application on a subdomain. As mentioned earlier, we also needed a staging environment that any of our developers could use.

Storing production and staging environments within the same bucket

Of course, we could have created a bucket for each environment, but to avoid an extra bucket, we put each one in its own folder.

We also enabled the bucket’s static website hosting feature.

However, we didn’t want to expose the bucket’s contents directly to the public.

Using CloudFront to serve the bucket content

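To take advantage of content caching and avoid exposing the bucket directly, we created two CloudFront distributions (production and staging) with the most affordable price class: PriceClass_100. This class only uses edge locations in North America, Europe, and Israel, so our content was cached close to users in North America; visitors from South America were still served, just from those more distant edge locations.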
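To keep the bucket private while CloudFront serves it, the usual approach today is an origin access control (OAC) plus a bucket policy that only lets the CloudFront service principal read objects on behalf of a specific distribution. This works with the bucket’s REST endpoint, not the static website endpoint we had enabled, so treat it as a sketch of how I would set it up now rather than what we ran. It assumes each distribution’s origin path points at its own folder; the bucket name comes from our pipeline, while the account and distribution IDs are placeholders.

```python
import json


def cloudfront_read_policy(bucket: str, account_id: str, distributions: dict) -> dict:
    """Bucket policy letting each CloudFront distribution (via OAC) read only its own folder.

    `distributions` maps a folder in the bucket (e.g. "production") to a distribution ID.
    """
    statements = []
    for index, (folder, distribution_id) in enumerate(distributions.items()):
        statements.append({
            "Sid": f"AllowCloudFrontRead{index}",
            "Effect": "Allow",
            # Only the CloudFront service may read, never anonymous users.
            "Principal": {"Service": "cloudfront.amazonaws.com"},
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{bucket}/{folder}/*",
            # ...and only on behalf of the distribution that owns this folder.
            "Condition": {"StringEquals": {
                "AWS:SourceArn": f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"
            }},
        })
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    # Placeholder account and distribution IDs.
    policy = cloudfront_read_policy(
        "cloudfront-frontend-default-bucket",
        "123456789012",
        {"production": "EPRODEXAMPLE", "staging": "ESTAGINGEXAMPLE"},
    )
    print(json.dumps(policy, indent=2))
```

Scoping each statement to one folder means the staging distribution cannot accidentally serve production files, even though both live in the same bucket.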

Certificate

We used a wildcard certificate in case we later wanted additional instances of the frontend, such as alternative or demo versions.

The certificate was issued for *.frontend.thestartup.domain.
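One detail worth knowing about wildcard certificates: the `*` matches exactly one DNS label. So `*.frontend.thestartup.domain` covers `developerone.frontend.thestartup.domain`, but neither the bare `frontend.thestartup.domain` nor a deeper name such as `pr-1.developerone.frontend.thestartup.domain`. The Python sketch below encodes that rule in simplified form (it only honors a wildcard as the whole leftmost label and ignores internationalized names).

```python
def wildcard_covers(cert_name: str, hostname: str) -> bool:
    """Return True if a certificate name such as '*.example.com' covers hostname.

    Simplified rule: '*' is only honored as the whole leftmost label and
    matches exactly one non-empty label.
    """
    cert_labels = cert_name.lower().rstrip(".").split(".")
    host_labels = hostname.lower().rstrip(".").split(".")
    if cert_labels[0] == "*":
        return (
            len(host_labels) == len(cert_labels)
            and host_labels[0] != ""
            and host_labels[1:] == cert_labels[1:]
        )
    # No wildcard: names must match exactly (case-insensitively).
    return cert_labels == host_labels


if __name__ == "__main__":
    cert = "*.frontend.thestartup.domain"
    for host in (
        "developerone.frontend.thestartup.domain",       # covered
        "frontend.thestartup.domain",                    # not covered: no label for '*'
        "pr-1.developerone.frontend.thestartup.domain",  # not covered: two labels deep
    ):
        print(host, wildcard_covers(cert, host))
```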

An environment per developer

For a couple of weeks, we experimented with URLs like developerone.frontend.thestartup.domain, with the goal of giving each developer a separate frontend environment connected to the staging API. However, without proper Git merge policies in place, this approach made the code hard to maintain.

We considered recreating the same structure for each Pull Request with a small Terraform script, but we discarded the idea because every deployment would have taken too long.

It is possible, but containers would be an easier way to deploy these short-lived environments.

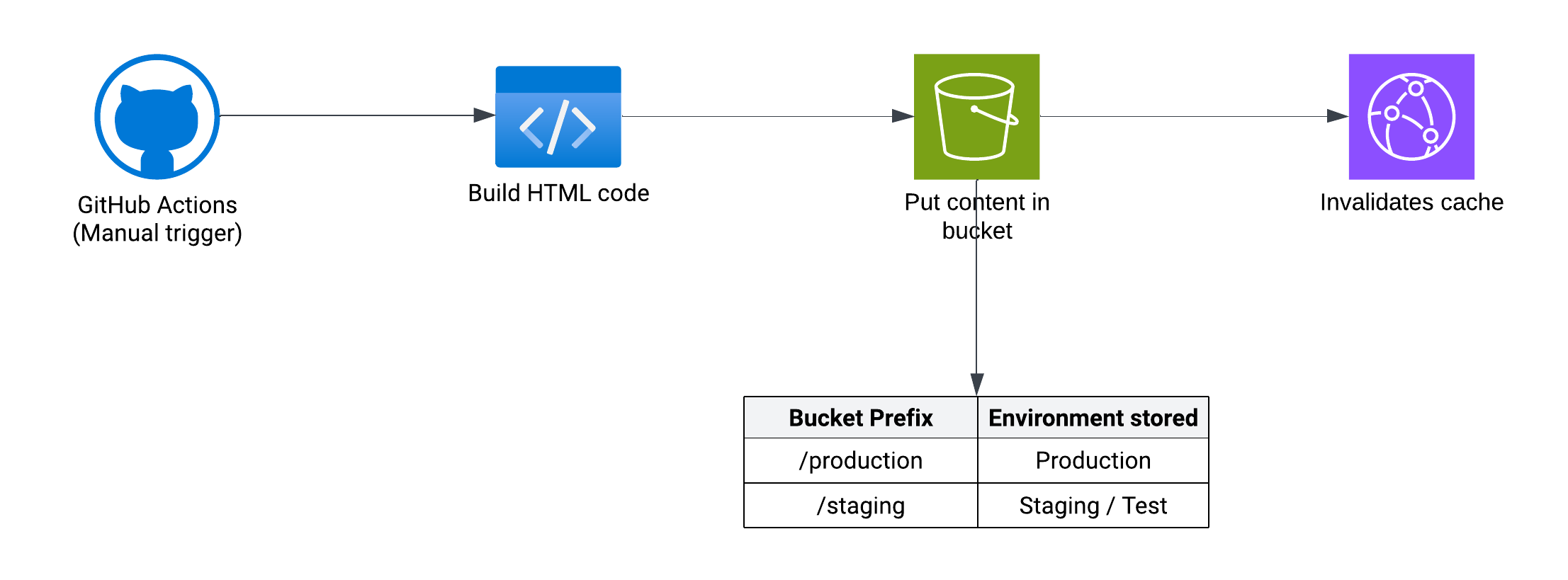

Pipeline to deploy the project

The pipeline built the static HTML and pushed it to the bucket to be served as a static site. To stay within the AWS ecosystem, I used AWS CodeCommit combined with AWS CodeBuild for version one.

When GitHub Actions started gaining popularity, I decided to switch to it, mainly because it let us create reports per PR.

In the bucket, we had two folders: /production and /staging. Before each build, we had to set the environment variables accordingly, so that the staging build targeted the staging API.
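The per-environment differences boiled down to two values: the bucket folder to sync into and the API the build should call. Here is a minimal Python sketch of that mapping. Only the bucket name and the folder names come from our setup; the API URLs and the `API_URL` variable name are hypothetical placeholders.

```python
from dataclasses import dataclass

BUCKET = "cloudfront-frontend-default-bucket"


@dataclass(frozen=True)
class FrontendEnv:
    name: str
    api_url: str  # hypothetical API endpoint, for illustration only

    @property
    def s3_uri(self) -> str:
        # Each environment lives in its own folder (prefix) of the shared bucket.
        return f"s3://{BUCKET}/{self.name}"


ENVIRONMENTS = {
    "production": FrontendEnv("production", "https://api.thestartup.domain"),
    "staging": FrontendEnv("staging", "https://staging-api.thestartup.domain"),
}


def build_env_vars(environment: str) -> dict:
    """Variables to export before building the frontend for a given environment."""
    try:
        env = ENVIRONMENTS[environment]
    except KeyError:
        raise ValueError(f"unknown environment: {environment!r}") from None
    return {"ENVIRONMENT": env.name, "API_URL": env.api_url}


if __name__ == "__main__":
    for name, env in ENVIRONMENTS.items():
        print(name, env.s3_uri, build_env_vars(name))
```

Keeping both values in one table makes it harder to build with the staging API and then sync into the production folder by mistake.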

GitHub pipeline

The following workflow is similar to the one we used to deploy the code.

The domain we queried (app.thestartup.domain in the production workflow) changed between environments: we resolved its CNAME to detect which CloudFront distribution to target, so that we could invalidate its cache after uploading the new content to the bucket.
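The lookup boils down to two steps: strip the trailing dot that `dig` prints, then find the distribution whose `DomainName` matches. Here is the same logic as a Python sketch over the JSON returned by `aws cloudfront list-distributions`. As an assumption beyond what we originally did, it also matches a distribution’s alternate domain names (`Aliases`), which would let you pass `app.thestartup.domain` directly and skip the `dig` step.

```python
from typing import Optional


def strip_trailing_dot(name: str) -> str:
    """dig prints fully qualified names such as 'd111111abcdef8.cloudfront.net.'."""
    return name[:-1] if name.endswith(".") else name


def find_distribution_id(listing: dict, hostname: str) -> Optional[str]:
    """Find a distribution ID in `aws cloudfront list-distributions` output.

    Matches either the distribution's own domain (the CNAME target) or one of
    its alternate domain names (Aliases). Returns None if nothing matches.
    """
    hostname = strip_trailing_dot(hostname).lower()
    # "Items" is omitted when there are no distributions or no aliases.
    items = listing.get("DistributionList", {}).get("Items", [])
    for dist in items:
        aliases = dist.get("Aliases", {}).get("Items", [])
        if dist["DomainName"].lower() == hostname or hostname in (a.lower() for a in aliases):
            return dist["Id"]
    return None
```

In a pipeline you would parse the CLI output with `json.loads` and pass it in along with the hostname.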

```yaml
name: Deploying Production

on: workflow_dispatch

jobs:
  deploy:
    runs-on: ubuntu-latest
    env:
      NODE_OPTIONS: "--max-old-space-size=8192"
      ENVIRONMENT: production
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Set up Node.js
        uses: actions/setup-node@v4
        with:
          node-version-file: ".nvmrc"
          cache: npm

      - name: Install latest npm version
        run: npm install -g npm@10

      - name: Install JQ
        run: sudo apt-get install -y jq

      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_FRONTEND_PIPELINE_ID }}
          aws-secret-access-key: ${{ secrets.AWS_FRONTEND_PIPELINE_KEY }}
          aws-region: us-east-2

      - name: Get and print CloudFront frontend url
        id: domain-data
        run: |
          # app.thestartup.domain is a CNAME pointing at the distribution's *.cloudfront.net name
          DOMAIN_CNAME=$(dig -t cname app.thestartup.domain +short)
          # dig prints a fully qualified name; drop the trailing dot
          DOMAIN_CLOUDFRONT=${DOMAIN_CNAME%.}
          echo "CloudFront domain: $DOMAIN_CLOUDFRONT"
          # ::set-output is deprecated; write step outputs to $GITHUB_OUTPUT instead
          echo "domain_name=$DOMAIN_CLOUDFRONT" >> "$GITHUB_OUTPUT"

      - name: Installing dependencies
        run: npm ci

      - name: Copying env file for production
        run: npm run prod:config

      - name: Building frontend
        run: npm run prod:build

      - name: Copy files to S3 and invalidate CloudFront cache
        env:
          CLOUDFRONT_DISTRIBUTION: ${{ steps.domain-data.outputs.domain_name }}
        run: |
          # Find the ID of the distribution whose domain matches the CNAME target
          CLOUDFRONT_ID=$(aws cloudfront list-distributions \
            | jq -r --arg domain "$CLOUDFRONT_DISTRIBUTION" \
                '.DistributionList.Items[] | select(.DomainName == $domain) | .Id')
          # Upload the build to this environment's folder, removing stale files
          aws s3 sync ./www "s3://cloudfront-frontend-default-bucket/$ENVIRONMENT" --delete
          # Invalidate everything so the edge locations fetch the new files
          aws cloudfront create-invalidation --distribution-id "$CLOUDFRONT_ID" --paths '/*'
```

What I would improve nowadays

Have you ever reviewed code you wrote years ago and felt ashamed of it? That’s totally normal. As we grow as developers, we start seeing our past mistakes and how they could have been avoided. That’s why I put together a short list of improvements I would make if I had to build the same environment today.

Adding WAF

Since security is so important nowadays, adding AWS WAF would be a no-brainer for me. With AWS managed rule groups, WAF can block common attacks such as SQL injection and cross-site scripting (XSS), and it can add a layer of protection against bots. Bots constantly probe websites for vulnerabilities or scrape them, consuming resources and driving up costs.
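As a sketch of what this could look like, the Python below builds the keyword arguments for boto3’s `wafv2` `create_web_acl` call using three AWS managed rule groups: the core rule set (which includes XSS rules), the SQL injection rule set, and Bot Control. The ACL name, metric names, priorities, and the `COMMON` inspection level are my own assumptions. Keep in mind that Bot Control is billed on top of the base WAF pricing, and that web ACLs for CloudFront must use the `CLOUDFRONT` scope and be created in us-east-1.

```python
import json

# (priority, rule group name); all are vendor "AWS" managed rule groups.
MANAGED_RULE_GROUPS = [
    (0, "AWSManagedRulesCommonRuleSet"),      # broad protections, including XSS
    (1, "AWSManagedRulesSQLiRuleSet"),        # SQL injection patterns
    (2, "AWSManagedRulesBotControlRuleSet"),  # bot detection (extra cost)
]


def visibility(metric_name: str) -> dict:
    """CloudWatch metrics and sampled requests for a rule or the whole ACL."""
    return {
        "SampledRequestsEnabled": True,
        "CloudWatchMetricsEnabled": True,
        "MetricName": metric_name,
    }


def web_acl_request(name: str = "frontend-web-acl") -> dict:
    """Kwargs for boto3.client("wafv2", region_name="us-east-1").create_web_acl(...)."""
    rules = []
    for priority, group in MANAGED_RULE_GROUPS:
        statement = {"ManagedRuleGroupStatement": {"VendorName": "AWS", "Name": group}}
        if group == "AWSManagedRulesBotControlRuleSet":
            statement["ManagedRuleGroupStatement"]["ManagedRuleGroupConfigs"] = [
                {"AWSManagedRulesBotControlRuleSet": {"InspectionLevel": "COMMON"}}
            ]
        rules.append({
            "Name": group,
            "Priority": priority,
            "Statement": statement,
            # Keep the actions (block/count) that AWS defines inside the rule group.
            "OverrideAction": {"None": {}},
            "VisibilityConfig": visibility(group),
        })
    return {
        "Name": name,
        "Scope": "CLOUDFRONT",  # CloudFront web ACLs are managed from us-east-1
        "DefaultAction": {"Allow": {}},
        "Rules": rules,
        "VisibilityConfig": visibility(name),
    }


if __name__ == "__main__":
    print(json.dumps(web_acl_request(), indent=2))
```

Once created, the web ACL’s ARN goes into the distribution configuration’s `WebACLId` field to attach it to CloudFront.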

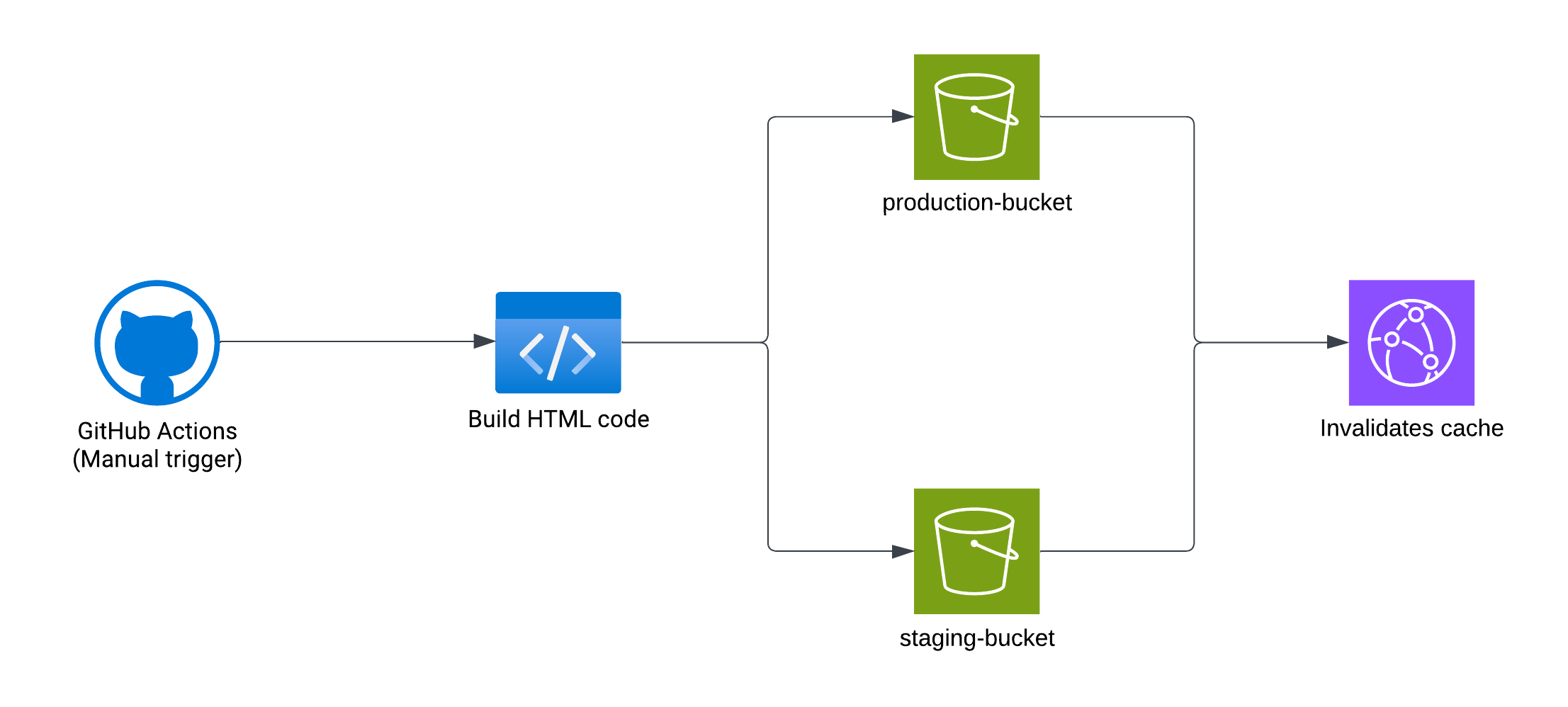

Use a different bucket per project

For simplicity, I put all the deployed content in the same bucket. That made giving every developer access easier, since I only had to deal with a single resource.

Today, I would create a separate bucket for each environment, so I could manage developer permissions per resource and restrict access to the production bucket to authorized company personnel only.
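With one bucket per environment, the developer policy can simply leave the production bucket out. Here is a minimal sketch of an IAM policy that lets developers run `aws s3 sync --delete` against a single bucket; the bucket name `thestartup-frontend-staging` is hypothetical.

```python
import json


def deploy_policy(bucket: str) -> dict:
    """IAM policy allowing `aws s3 sync --delete` into a single bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # sync lists the destination to work out what changed.
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Read, upload, and delete (for --delete) objects in this bucket.
                "Sid": "ManageObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical staging bucket; the production bucket is never mentioned.
    print(json.dumps(deploy_policy("thestartup-frontend-staging"), indent=2))
```

Because IAM denies anything not explicitly allowed, attaching only this policy to the developers’ group keeps the production bucket out of reach without a separate deny statement.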

If we really need an environment per developer or per Pull Request

If we need more environments beyond staging and production, I would deploy those temporary environments as NGINX containers serving the static build, since creating a CloudFront distribution for each one is slow and adds cost.

About Part 2

Part 2 will cover the backend, where most of the complexity resided. Since we deployed it as containers, AWS ECS helped us reduce the effort of maintaining them.

I will add a link below once that post is ready.